Introduction

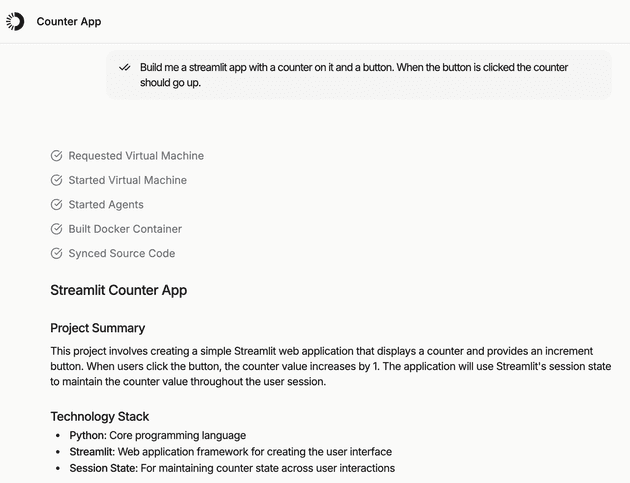

When I started at Proofs, an early version of the Agent ran on the CTO's laptop; 18 months later, it's a fully functional AI Agent that runs in production. I brought the Agent into production on GCP by building a Scheduler that starts a VM for the Agent to run on and manages that VM's lifetime, so it isn't left running forever. After a lot of prompt engineering, RAG, and additional tooling to make the Agent more reliable, we ended up with an AI Agent that can reliably produce software on its own.
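A minimal sketch of the Scheduler's lifetime management, assuming a heartbeat-plus-idle-timeout policy (the post does not say which policy Proofs actually uses). `VmScheduler`, `Lease`, the `agent-<id>` VM naming, and the 30-minute default are hypothetical. `start_vm` and `stop_vm` stand in for whatever calls really create and stop the GCP VM; no real GCP API is shown.

```python
import time
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class Lease:
    """One agent's VM and the last time the agent reported it was still working."""
    vm_name: str
    last_heartbeat: float


class VmScheduler:
    """Starts one VM per agent session and stops VMs whose agent has gone idle."""

    def __init__(self, start_vm: Callable[[str], None], stop_vm: Callable[[str], None],
                 idle_timeout: float = 1800.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._start_vm = start_vm        # hypothetical wrapper around the cloud API
        self._stop_vm = stop_vm          # hypothetical wrapper around the cloud API
        self._idle_timeout = idle_timeout
        self._clock = clock
        self._leases: Dict[str, Lease] = {}

    def start_session(self, agent_id: str) -> str:
        """Return the agent's VM name, starting a new VM only if none is leased."""
        if agent_id in self._leases:
            self.heartbeat(agent_id)
            return self._leases[agent_id].vm_name
        vm_name = f"agent-{agent_id}"
        self._start_vm(vm_name)
        self._leases[agent_id] = Lease(vm_name, self._clock())
        return vm_name

    def heartbeat(self, agent_id: str) -> None:
        """Called periodically by a running agent to keep its VM alive."""
        self._leases[agent_id].last_heartbeat = self._clock()

    def reap_idle(self) -> List[str]:
        """Stop every VM idle for longer than idle_timeout; return their names."""
        now = self._clock()
        expired = [agent_id for agent_id, lease in self._leases.items()
                   if now - lease.last_heartbeat > self._idle_timeout]
        stopped = []
        for agent_id in expired:
            lease = self._leases.pop(agent_id)
            self._stop_vm(lease.vm_name)
            stopped.append(lease.vm_name)
        return stopped


if __name__ == "__main__":
    fake_now = [0.0]  # controllable clock so the example is deterministic
    sched = VmScheduler(print, print, idle_timeout=60, clock=lambda: fake_now[0])
    sched.start_session("abc123")
    fake_now[0] = 30
    sched.heartbeat("abc123")
    fake_now[0] = 100  # 70 s since the last heartbeat, past the 60 s timeout
    print(sched.reap_idle())
```

Running `reap_idle` on a timer (say, once a minute) is what keeps a forgotten session from holding a VM forever; injecting the clock keeps the policy testable without waiting in real time.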

What is Proofs?

Proofs is an AI Software Development Agent that quickly builds Proof of Concept (POC) applications for Sales & Solution Engineers. Our Ideal Customer Profile (ICP) is a Sales Engineer at Shopify who needs to:

• Quickly bootstrap a Shopify Hydrogen app (Shopify's React-based framework for headless storefronts).

• Tailor the app to a brand like Nike.

• Populate the store with Nike products by scraping public data and generating mock products to fill any gaps.

What makes it different?

• Proofs runs Plan -> Code -> Test in a single loop, so it works like Cursor's "YOLO mode" but inside a neatly quarantined environment.

• Runs inside a Docker container on an isolated VM, so every POC is sandboxed, reproducible, and disposable.

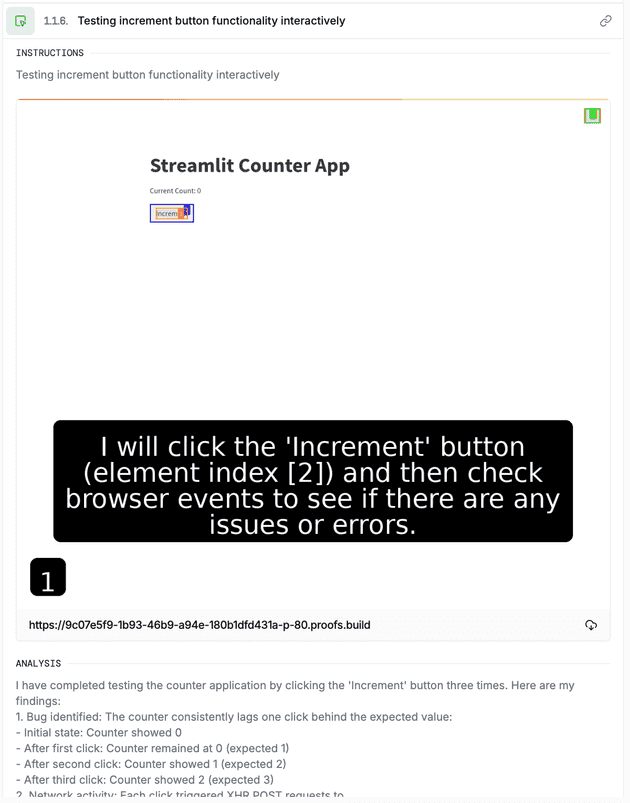

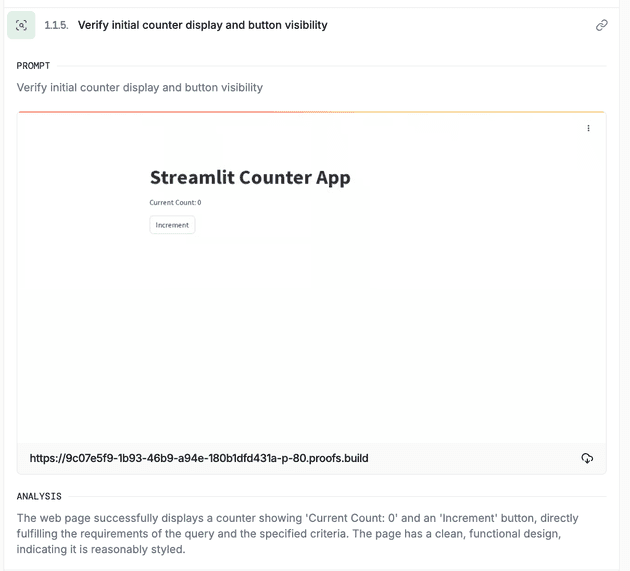

• Browser-in-the-loop developer testing - Proofs drives real browser sessions for interactive QA via Browser Use.

• Visual Web Inspection - Captures screenshots and analyzes them to spot layout or image asset regressions automatically.
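The Plan -> Code -> Test loop can be pictured as a bounded retry loop in which failing test output feeds the next planning step. This is an illustrative sketch, not Proofs' implementation: `plan`, `code`, and `test` are hypothetical callables standing in for the LLM planning call, the Agent's file edits inside the Docker sandbox, and the build/browser QA step, and `max_iterations` is an assumed budget.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional


@dataclass
class CheckResult:
    """Outcome of one test pass, e.g. build, browser QA, screenshot checks."""
    passed: bool
    errors: List[str] = field(default_factory=list)


def plan_code_test(task: str,
                   plan: Callable[[str, List[str]], str],
                   code: Callable[[str], None],
                   test: Callable[[], CheckResult],
                   max_iterations: int = 5) -> Optional[int]:
    """Loop Plan -> Code -> Test until the checks pass.

    Returns the 1-based iteration that passed, or None if the budget runs out.
    """
    feedback: List[str] = []
    for iteration in range(1, max_iterations + 1):
        current_plan = plan(task, feedback)  # hypothetical LLM planning call
        code(current_plan)                   # hypothetical: agent edits files in the sandbox
        result = test()                      # hypothetical: build + browser checks
        if result.passed:
            return iteration
        feedback = result.errors             # failures steer the next plan
    return None


if __name__ == "__main__":
    outcomes = iter([CheckResult(False, ["npm run build failed"]), CheckResult(True)])
    passed_at = plan_code_test(
        "Hydrogen store for Nike",
        plan=lambda task, fb: f"plan for {task}, fixing {fb}",
        code=lambda p: None,
        test=lambda: next(outcomes),
    )
    print(passed_at)
```

Capping the iterations matters in a "YOLO mode" setup: without a budget, a task the Agent can't solve (for example, one missing credentials) would loop indefinitely inside the sandbox.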

Challenges at Proofs

Building an AI Software Engineer isn't easy. The toughest hurdle has been making the Agent reliable, since LLMs are anything but deterministic. We used RAG-style "hints" to push the Agent in the right direction when needed, which steered it toward more stable outcomes. But the biggest gain came from "Blueprints": a behind-the-scenes way of prompting the Agent, not shown to users, that supplies a previously successful plan along with hints and details on how to fix any errors. With Hints + Blueprints, we ended up with an Agent that reliably produces similar results each time, while still styling the project and populating it with products specific to each prospect's needs.
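To illustrate how Blueprints and hints might be combined into a hidden prompt, here is a sketch that picks the closest previously successful Blueprint and attaches any keyword-matched hints. The retrieval is plain word-overlap (Jaccard) similarity, swapped in for brevity; a production RAG setup would more likely use embeddings. `Blueprint`, `build_prompt`, and the keyword-to-hint dictionary are all hypothetical.

```python
import re
from dataclasses import dataclass
from typing import Dict, List, Set


@dataclass
class Blueprint:
    name: str
    description: str  # what the successful run built
    plan: str         # the plan that produced it


def tokens(text: str) -> Set[str]:
    """Lowercase word set used for similarity and keyword matching."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def jaccard(a: Set[str], b: Set[str]) -> float:
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def build_prompt(request: str, blueprints: List[Blueprint], hints: Dict[str, str]) -> str:
    """Assemble the hidden prompt: closest Blueprint plus keyword-matched hints.

    `blueprints` must be non-empty; hint keywords are single lowercase words.
    """
    req = tokens(request)
    best = max(blueprints, key=lambda bp: jaccard(req, tokens(bp.description)))
    matched = [hint for keyword, hint in hints.items() if keyword in req]
    parts = [f"User request: {request}",
             f"A previously successful plan ({best.name}):\n{best.plan}"]
    if matched:
        parts.append("Hints:\n" + "\n".join(f"- {h}" for h in matched))
    return "\n\n".join(parts)


if __name__ == "__main__":
    blueprints = [
        Blueprint("hydrogen-store", "Shopify Hydrogen storefront with brand products",
                  "1. Scaffold the app\n2. Apply brand theme\n3. Import products"),
        Blueprint("landing-page", "static marketing landing page", "1. Build one page"),
    ]
    hints = {"hydrogen": "Start the dev server on a free port and report it."}
    print(build_prompt("Build a Hydrogen storefront for Nike", blueprints, hints))
```

Because the Blueprint is injected in the background rather than typed by the user, the user's request stays short while the Agent still starts from a plan that is known to work.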

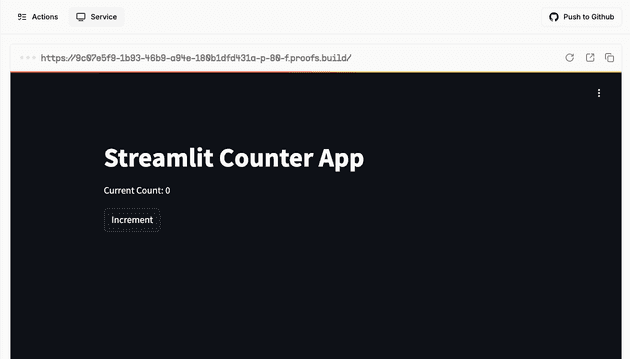

One of the non-LLM challenges I worked on was letting the AI show a live "Preview" of what it's building while it's being built (shown above). That meant letting the Agent know which ports are free and what is already running, detecting when something is running that should be shown on the frontend, and labeling it so it can be shown to the user. Our solution puts the Agent's unique ID and the port number in the subdomain, which is used to forward the traffic to the Docker container on a VM running in GCP. This required a GCP Load Balancer pointing to an NGINX/OpenResty instance running custom Lua scripts that decide where to route the traffic and handle some extra edge cases, such as rewriting localhost URLs on the page to the correct URL when the AI Agent forgets to do so.
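The routing rule can be sketched in Python for readability, although in the setup described it runs as Lua inside OpenResty (the response rewrite, for example, would fit in a `body_filter_by_lua_block`). The sketch assumes a hostname format of `<port>-<agent_id>.preview.example.com` and an in-memory map from agent ID to VM address; the domain, the format, and both helper names are hypothetical.

```python
import re
from typing import Dict, Optional, Tuple

PREVIEW_DOMAIN = "preview.example.com"  # hypothetical preview domain

# Hypothetical host format: <port>-<agent_id>.preview.example.com
HOST_RE = re.compile(r"^(\d{2,5})-([a-z0-9]+)\." + re.escape(PREVIEW_DOMAIN) + r"$")
# Absolute localhost URLs the agent may have left in generated pages
LOCALHOST_RE = re.compile(r"https?://(?:localhost|127\.0\.0\.1):(\d{2,5})")


def route(host: str, vm_ips: Dict[str, str]) -> Optional[Tuple[str, int]]:
    """Map a preview Host header to the (VM address, container port) to proxy to."""
    hostname = host.split(":", 1)[0].lower()  # drop any ":443"-style suffix
    m = HOST_RE.match(hostname)
    if m is None:
        return None
    port, agent_id = int(m.group(1)), m.group(2)
    if agent_id not in vm_ips or port > 65535:
        return None  # unknown agent or impossible port: don't forward
    return vm_ips[agent_id], port


def rewrite_localhost(body: str, agent_id: str) -> str:
    """Point localhost URLs at the public preview subdomain for the same port."""
    return LOCALHOST_RE.sub(
        lambda m: f"https://{m.group(1)}-{agent_id}.{PREVIEW_DOMAIN}", body)


if __name__ == "__main__":
    vms = {"abc123": "10.0.0.5"}
    print(route("3000-abc123.preview.example.com", vms))
    print(rewrite_localhost('<img src="http://localhost:3000/logo.png">', "abc123"))
```

Anchoring the host pattern at both ends keeps arbitrary Host headers from being routed, and looking agent IDs up in a known map means unknown IDs are rejected rather than forwarded.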

Conclusion

Although no AI coder is perfect, Proofs’ agent is consistently reliable when given clear, well-scoped prompts. If someone tries a catch-all request such as “Build me a fully automated e-commerce store that will make me a millionaire, complete with Stripe, SendGrid, and Twilio” and can’t supply the necessary credentials, the agent understandably stalls.

When our core ICP, Sales Engineers, provides concrete requirements and the right keys, Proofs delivers a working POC every time. The AI-dev landscape has become far more crowded since we kicked off 18 months ago (think Cursor, Windsurf, and Claude Code), but Proofs still stands out by focusing on full-stack Shopify Hydrogen POCs, from prompt to browser-tested build.